Threads

Definition:

A Thread also called lightweight process, is basic CPU utilization; it compromises a thread ID, a program counter, a register set, and a stack. A thread is an entity within a process that can be scheduled for execution.

If we want a process to be able to execute on multiple CPUs at a time, to take advantage of the multi-core systems, the process must have several execution-context called threads. A thread is an active entity which executes a part of a process. Multiple threads execute simultaneously with each other which results in the execution of a single whole process. As several threads of a process execute at the same time they are required to maintain coordination with each other. Coordination between the threads is required in order to share system resources like I/O devices, memory, and of course CPU. The threads of a process are the part of the same virtual address space (i.e. they share all virtual to physical mapping), they share all the data, code and file. However, they will be executing the different instruction, they will be accessing the different portion of the address space or different in other ways. A different thread has a different stack, a different stack pointer register, a different Program Counter, and other registers. The Process Control Block (PCB) of a multi-threaded process is more complex than a single threaded process as shown in the image below. It contains all the information which is shared among the threads and separate execution context of all threads.

There are several relationships between user-level threads and kernel-level threads, three most common relationship are:

One-to-one: In this model, each user-level thread is associated with a kernel-level thread. When a user process creates a user-level thread, there is a kernel-level thread either created or already present.

|

| PCB: Single-threaded and multithreaded process |

Benefits of Multithreading

Parallelization: In multi-processor architecture, different threads can execute different instructions at a time, which result in parallelization which speeds up the execution of the process.

Specialization: By specializing different threads to perform the different task, we can manage threads, for example, we can give higher priority to those threads which are executing the more important task. Also in multi-processor architecture specialization leads to the hotter cache which improves performance.

Efficient: Multi-threaded programs are more efficient as compared to multi-process programs in terms of resource requirement as threads share address space while process does not, so multi-process programs will require separate memory space allocation. Also, Multi-threaded programs incur lower overhead as inter-thread communication is less expensive.

Hide Latency: As context switching among the process is a costly operation, as all the threads of a process share the same virtual to physical address mapping and other resources, context switch among the thread is a cheap operation and require less time. When the number of thread is greater than the number of CPU and a thread is in idle state (spending the time to wait for the result of some interrupt) and its idle time is greater than two times the time required for switching the context to another thread, it will switch the switch context to another thread to hide idling time.

Operating system support threads by various abstractions

- A data structure is needed to store thread information which allows us to identify specific thread and keeps track of their resource usage.

- Mechanism to create and manage threads.

- Mechanism to safely coordinate among the threads running concurrently in the same address space. As threads share virtual and physical address spaces, the concurrently running threads may override each other's input or each other's result. It might also be possible that one thread is reading the input while other is writing at the same time.

Synchronisation Mechanisms:

To deal with concurrency issues a mechanism is needed to execute threads in an exclusive manner to ensure threads access data and other resources one at a time, for this, we use a mutex which is nothing but mutual exclusion object which allows multiple threads to share resources like file access or memory access, but not simultaneously.

A waiting mechanism is also needed for threads which are waiting for other threads to complete, specifying what are they waiting for so that they are not required to keeps on checking whether they are allowed to execute the operation or not, they will be notified whenever they are allowed. This type of inter-thread coordination is generally handled by condition variable.

Thread and Thread Creation

A thread data structure contains information about thread identity, register values like program counter, stack pointer, etc, stack and other attributes which help thread management system to manage threads like scheduling threads, debugging threads, etc.

For thread creation, Birrell proposes a Fork call with two parameters proc and args, where proc is the procedure the created thread will start executing with the arguments args. When a thread calls a fork, a new thread is created i.e. a new thread data structure is created with its program counter initialized to the first instruction of the proc argument and args arguments are available on its stack. After the end of execution of the child thread, the result is returned to the parent thread for this we need another mechanism. Birrell also proposes a Join call which takes thread Id as an argument. When Join is called from parent thread after fork call with child's thread id as an argument, it blocks the parent until the child completes its execution, the join call also returns the child's result to the parent thread, at that point all the resources that were allocated to the child is freed and execution of child thread is terminated.

Mutual exclusion API

For mutual exclusion of execution of concurrent threads, the operating system supports mutex. A mutex is like a lock which is used whenever the thread is accessing the data or resources that are shared among different threads. When a thread locks a mutex it has exclusive access to the shared resources. Other threads attempting to lock the mutex (as other threads may also need to perform operations that require exclusive access to shared resources) are not going to succeed and have to wait till the thread which has locked the mutex (i.e. the owner thread) completes its task. The data structure of mutex at least contains information about its lock status (whether the mutex is locked or not), list of all the blocked threads which are waiting for the mutex to be free, i.e. the owner thread to complete its work. The mutex data structure must also maintain some information about the owner thread.

The portion of the code performed by the thread under the locked state of the mutex is called critical section. The critical section contains the code which performs the operations which require only one thread at a time to perform. When the owner thread exits the critical section it releases the mutex and other waiting thread can lock it for their exclusive access to shared resources.

|

| Mutex Data Structure |

To represent a conditional variable there is a data structure to represent a conditional variable. This data structure contains a reference to the corresponding mutex for implementation of wait API and list of all the waiting threads for implementation of signal and broadcast API. There is a wait construct which takes mutex and condition as arguments. There is a Signal construct which takes condition as an argument and notifies one waiting thread on the fulfillment of the condition. There is also broadcast construct which notifies all the waiting threads on the condition.

In the implementation of the wait API, first the associated mutex is released and the thread is pushed into the waiting thread list present in the data structure. Then it waits for the notification, at some point when the notification is received, it removes the thread from the waiting queue and re-acquire the mutex and finally exits the execution of wait construct.

In the implementation of the wait API, first the associated mutex is released and the thread is pushed into the waiting thread list present in the data structure. Then it waits for the notification, at some point when the notification is received, it removes the thread from the waiting queue and re-acquire the mutex and finally exits the execution of wait construct.

Deadlocks

Deadlock is a situation where a set of processes are blocked because each process is holding a resource and waiting for another resource acquired by some other process.

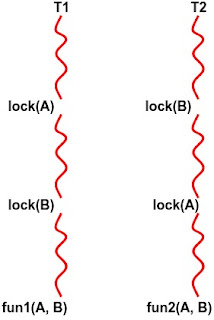

Suppose there are two thread T1 and T2. In order to accomplish their task, fun1 and fun2, for which both of them need two resources A and B. A situation may occur in which one thread say T1 acquires a resource A and T2 on another core (CPU) acquires resource B. As for the successful execution of their respective task they need another resource for which both threads will keep on waiting for another to thread to complete which is not going to happen. We call this situation a Deadlock.

|

| The deadlock between two threads |

Deadlock Prevention

Three common deadlock prevention methods are:- Lock Ordering: Lock ordering is done by ordering the manner in which the resources are acquired which can prevent deadlocks in some cases like above. Lock ordering is a simple yet effective deadlock prevention mechanism. However, it can only be used if you know about all locks needed ahead of taking any of the locks. This is not always the case.

- Lock Timeout: Another deadlock prevention mechanism is to put a timeout on lock attempts meaning a thread trying to obtain a lock will only try for so long before giving up. If a thread does not succeed in taking all necessary locks within the given timeout, it will backup, free all locks taken, wait for a random amount of time and then retry. The random amount of time waited serves to give other threads trying to take the same locks a chance to take all locks, and thus let the application continue running without locking.

- Deadlock Detection: Deadlock detection is a heavier deadlock prevention mechanism aimed at cases in which lock ordering isn't possible, and lock timeout isn't feasible. Every time a thread takes a lock it is noted in a data structure (map, graph etc.) of threads and locks. Additionally, whenever a thread requests a lock this is also noted in this data structure. When a thread requests a lock but the request is denied, the thread can traverse the lock graph to check for deadlocks.

User and Kernel threads

Kernel threads are supported within the kernel of the OS itself. All modern OSs support kernel-level threads, allowing the kernel to perform multiple simultaneous tasks and/or to service multiple kernel system calls simultaneously. There is no thread management code in the application area. Kernel threads are supported directly by the operating system. Any application can be programmed to be multithreaded. All of the threads within an application are supported within a single process.

User threads are above the kernel and without kernel support. These are the threads that application programmers use in their programs. the thread management kernel is not aware of the existence of threads. The thread library contains code for creating and destroying threads, for passing message and data between threads, for scheduling thread execution and for saving and restoring thread contexts.

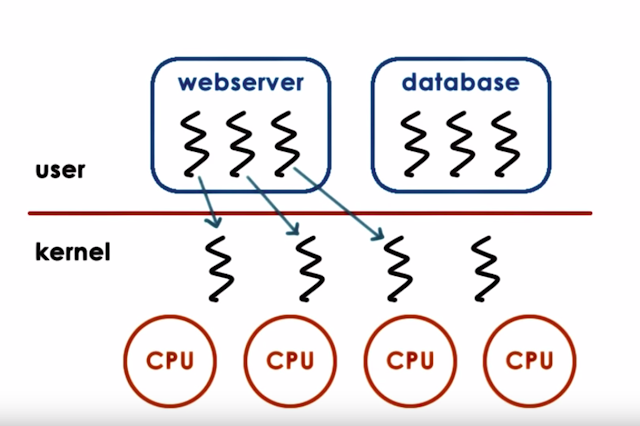

One-to-one: In this model, each user-level thread is associated with a kernel-level thread. When a user process creates a user-level thread, there is a kernel-level thread either created or already present.

|

| one-to-one multithreading |

- Advantages: Operating system can directly see, manage, synchronize, schedule, or block threads. Since OS already supports the threading application can directly take advantage of this support.

- Disadvantage: For every operation, the application has to do a system call which involves changing the user to kernel mode which is an expensive operation. This model is not much flexible as applications have to rely on thread support provided by OS. As OS may have limits on the number of threads it can create or may have any other policies.

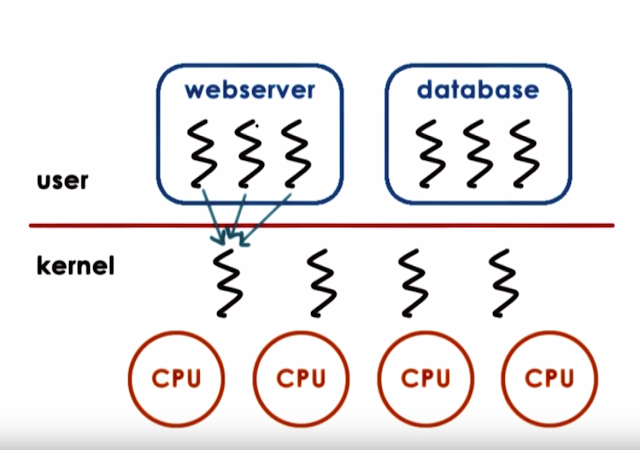

|

| Many-to-one multithreading |

- Advantages: As all of the management is done by thread management library present at the user-level, everything is done by user-level thread library like scheduling, synchronization, context change, etc. So no kernel support is required hence no constraints on limits, policies, etc. Also, we don't need to do frequent system calls which is an expensive task.

- Disadvantages: OS does not have any information regarding the scheduling done at the user-level, so OS may block the entire process if one user-level thread blocks on I/O.

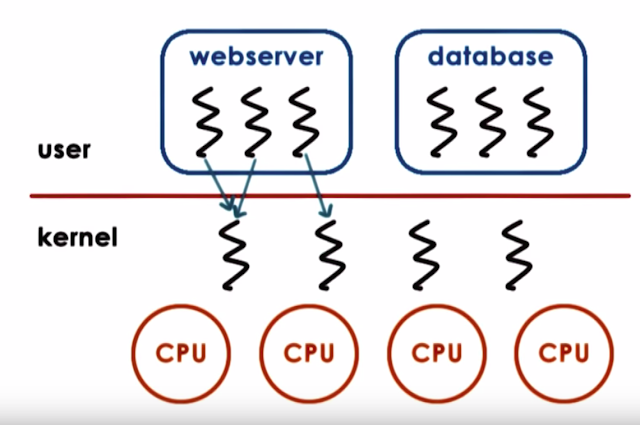

Many-to-many: In this model, some threads are mapped directly to kernel threads while in some cases many threads are mapped to a single kernel thread like many-to-one and are managed by the thread management library at user-level. This model is the best of both many-to-one and one-to-one, and have advantages of both models. However, this model requires coordination between user-level thread manager and kernel-level thread manager.

|

| many-to-many multithreading |

Summary

In this post, we learned about threads, several mechanisms related to them like mutexes, conditional variable. We also discussed briefly deadlocks and different modes of multi-threading, their problems, their solutions, and the various design approaches.

Comments

Post a Comment